As web technologies evolve, the tools that analyze them should evolve too. This post is about the challenges of detecting web software and its versions.

I've been a WhatWeb user for a long time and have even written my own plugins. Its development has seen little activity lately, so I started using Wappalyzer, but it's passive and lacks some useful features that WhatWeb has. Now that I'm starting a new project where software and version detection is essential, I see that the current solutions don't fit my requirements, though I can take some good ideas from them.

I've split the problem into two parts: first, the challenges of detecting software and second, the more complicated issue of detecting the software version. In this post, I call a program that detects the web software running on a site a detector.

Detecting the software

In the past, a single request was enough to know what software was running on a site and to get almost every library reference. However, with the extensive use of JavaScript, obtaining library references from a single request has become more complex. You also have to consider the new landscape of minifiers, bundlers and obfuscators.

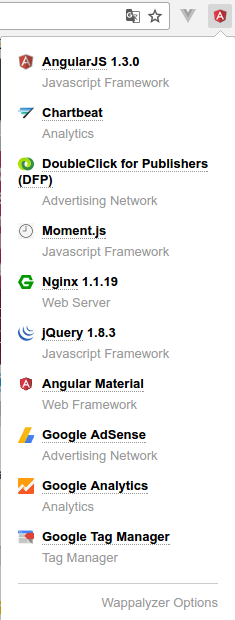

Nowadays a JavaScript rendering engine is almost a must in a detector. Without it, you miss a lot of newer sites that load libraries dynamically. Currently, WhatWeb makes a plain request and doesn't execute the JavaScript in the response. For example, here is a detection run against Fayerwayer, which is an Angular application:

$ ruby whatweb https://www.fayerwayer.com

https://www.fayerwayer.com [200] Country[CHILE][CL], HTTPServer[nginx/1.1.19], IP[190.13.66.54], UncommonHeaders[access-control-allow-origin], nginx[1.1.19]

The same happens with Wappalyzer used as a library:

$ node wapp.js

    { Nginx:
       { app: 'Nginx',
         confidence: { 'headers Server /nginx(?:\/([\d.]+))?/i': 100 },
         confidenceTotal: 100,
         detected: true,
         excludes: [],
         version: '1.1.19',
         versions: [ '1.1.19' ] } }

However, Wappalyzer is also available as a Firefox and Chrome extension, and in those cases it runs the detection against the rendered HTML provided by the browser. That way it successfully detects the software on the site.
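To make the difference concrete, here is a minimal sketch in Python that runs the same script-reference extraction over two inputs. Both HTML snippets, the file names in them and the `ScriptCollector` helper are made up for illustration; a real detector would get the second input from a rendering engine.

```python
from html.parser import HTMLParser


class ScriptCollector(HTMLParser):
    """Collect the src attribute of every <script> tag."""

    def __init__(self):
        super().__init__()
        self.sources = []

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            src = dict(attrs).get("src")
            if src:
                self.sources.append(src)


def script_sources(html):
    """Return the list of script URLs referenced in an HTML document."""
    collector = ScriptCollector()
    collector.feed(html)
    return collector.sources


# Hypothetical raw response: a loader script injects the real libraries later.
RAW_HTML = """
<html><head><script src="/static/loader.js"></script></head>
<body><div id="app"></div></body></html>
"""

# Hypothetical DOM after a browser has executed loader.js.
RENDERED_HTML = """
<html><head><script src="/static/loader.js"></script>
<script src="/static/vendor/angular.min.js"></script></head>
<body><div id="app" class="ng-scope"></div></body></html>
"""

raw = script_sources(RAW_HTML)
rendered = script_sources(RENDERED_HTML)
print(raw)
print(rendered)
```

The extraction logic is identical in both calls; only the rendered input exposes the Angular reference, which is why the browser extension sees more than the library does.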

Going deeper into the subject, minifiers add an extra layer of complexity. Looking at jQuery releases, detection seems simple in some cases because there are key strings (here, comments) that help identify the software. However, minifiers like Uglify2 have many options, so a single source file can produce many different minified files. It's an open area of research.
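As a rough illustration of the key-string approach, the following Python sketch looks for the banner comment that jQuery places at the top of its minified builds. The regex and the two sample strings are my own assumptions for the example, not code from any existing detector.

```python
import re

# Assumed banner format, based on the comment shipped in jQuery's minified builds.
JQUERY_BANNER = re.compile(r"/\*!\s*jQuery v(\d+\.\d+\.\d+)")


def jquery_version(source):
    """Return the version found in the banner comment, or None."""
    match = JQUERY_BANNER.search(source)
    return match.group(1) if match else None


# Illustrative inputs: the same code with and without its banner comment.
with_banner = (
    "/*! jQuery v1.12.4 | (c) jQuery Foundation | jquery.org/license */\n"
    "!function(a,b){}(window);"
)
stripped = "!function(a,b){}(window);"

print(jquery_version(with_banner))
print(jquery_version(stripped))
```

The second call returns `None`: once a minifier is configured to drop comments, the key string is gone and this kind of check no longer works.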

Bundling consists of putting different libraries into a single file. Given a bundle.js file, it can be easy to detect software by searching for key strings, but it would be even better to split bundle.js and compare its contents against known libraries at some level (the match won't be identical, but a similarity algorithm could assist).
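One possible shape for that non-identical comparison is a similarity ratio. The sketch below uses `difflib.SequenceMatcher` from the Python standard library, and assumes the bundle has already been split into chunks somehow, which is the hard part and is not shown. The library names and sources are toy stand-ins.

```python
from difflib import SequenceMatcher


def similarity(a, b):
    """Return a ratio in [0, 1] describing how alike two strings are."""
    return SequenceMatcher(None, a, b).ratio()


def best_match(chunk, known_libraries):
    """Return (name, score) for the known library most similar to chunk."""
    scored = [
        (name, similarity(chunk, source))
        for name, source in known_libraries.items()
    ]
    return max(scored, key=lambda item: item[1])


# Toy stand-ins for reference copies of two libraries (hypothetical).
KNOWN = {
    "lib-a": "function add(a,b){return a+b}function sub(a,b){return a-b}",
    "lib-b": "var Router=function(routes){this.routes=routes};"
             "Router.prototype.go=function(p){return this.routes[p]}",
}

# A chunk of a bundle where a minifier renamed the parameters of lib-a.
chunk = "function add(x,y){return x+y}function sub(x,y){return x-y}"

name, score = best_match(chunk, KNOWN)
print(name, round(score, 2))
```

Character-level similarity is only one option and it gets slow on large files; it is used here because it needs nothing beyond the standard library.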

Obfuscators are even harder to deal with, but they aren't commonly used in web applications (I see them in custom applications but not in widely used libraries).

Detecting the software version

Let's start detecting the software version with the simplest approach: getting the version from the main response. There are several ways to do it:

- Some text indicator (e.g. powered by A version x.x.x).

- Some version argument (e.g. ?ver=x.x.x).

- The version as part of the path (e.g. /sw/x.x.x/script.js).
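The three indicators above can be sketched with a few regular expressions in Python. The patterns, function names and `example.com` URLs are assumptions made for this example; real sites vary a lot more than this.

```python
import re
from urllib.parse import parse_qs, urlparse

# Assumed patterns for the three indicators; real-world formats vary.
TEXT_VERSION = re.compile(r"powered by (\w+) version (\d+(?:\.\d+)+)", re.IGNORECASE)
PATH_VERSION = re.compile(r"/(\d+(?:\.\d+)+)/")


def version_from_text(text):
    """Return (software, version) from a 'powered by' style string, or None."""
    match = TEXT_VERSION.search(text)
    return (match.group(1), match.group(2)) if match else None


def version_from_url(url):
    """Try the ?ver= argument first, then a version-looking path segment."""
    parsed = urlparse(url)
    ver = parse_qs(parsed.query).get("ver")
    if ver:
        return ver[0]
    match = PATH_VERSION.search(parsed.path)
    return match.group(1) if match else None


print(version_from_text("Powered by Foo version 1.2.3"))
print(version_from_url("https://example.com/js/jquery.js?ver=1.12.4"))
print(version_from_url("https://example.com/sw/2.3.1/script.js"))
print(version_from_url("https://example.com/script.js"))
```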

From an example site, we can see that some data can be extracted correctly:

<script src='http://www.espacioculinario.cl/wp-includes/js/jquery/jquery.js?ver=1.12.4'></script>

It uses jQuery version 1.12.4. That was easy, but it's not always the case because:

- Nowadays we usually don't find text indicators in common frontend libraries.

- The version argument isn't trustworthy. For instance, the example site has a WordPress plugin called recipe-card:

<script src='http://www.espacioculinario.cl/wp-content/plugins/recipe-card/js/post.js?ver=4.6.1'></script>

Is its version 4.6.1? According to the plugin homepage, its latest release was 1.1.7, but in some installations the version gets contaminated with the WordPress version. So it's not reliable to extract the version from parameters like ver.

A good idea from WhatWeb is that some plugins include MD5 comparisons of the library content, so you get the version with high precision. Obviously, this involves an additional request to the library URL.
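The idea can be sketched in Python as a lookup table from digest to version. This is not WhatWeb's implementation; the release contents below are toy byte strings, and in practice the table would be built from the real files of each release.

```python
import hashlib


def md5_hex(content):
    """Return the hexadecimal MD5 digest of a bytes object."""
    return hashlib.md5(content).hexdigest()


# Hypothetical file contents standing in for two releases of a library.
RELEASES = {
    "1.0.0": b"/* lib 1.0.0 */ function f(){return 1}",
    "1.1.0": b"/* lib 1.1.0 */ function f(){return 2}",
}

# Lookup table a detector would ship: digest -> version.
KNOWN_HASHES = {md5_hex(body): version for version, body in RELEASES.items()}


def version_from_content(content):
    """Return the version whose digest matches the content, or None."""
    return KNOWN_HASHES.get(md5_hex(content))


print(version_from_content(RELEASES["1.1.0"]))
print(version_from_content(b"locally modified copy"))
```

An exact hash only matches byte-identical files, so a copy that was re-minified or edited locally returns `None` even if it is the same release.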

Tests

I've been working for almost five years at Scrapinghub on several projects involving web scraping, and when you develop this kind of software it's very important to have a good test suite. Let's take the Eserv plugin from WhatWeb as an example:

    Plugin.define "Eserv" do
      author "Brendan Coles <bcoles@gmail.com>" # 2012-10-22
      version "0.1"
      description "Eserv - Mail Server - SMTP/POP3/IMAP/HTTP"
      website "http://www.eserv.ru/"
      matches [
        # Version Detection # HTTP Server Header
        { :search=>"headers[server]", :version=>/^Eserv\/([^\s]+)/ },
        # Meta Generator # Version Detection
        { :version=>/<meta name="generator" content="Eserv\/([^\s^"]+)" \/>/ },
        # Powered by footer # Version Detection
        { :version=>/<span id='powered_by'>[^<]+<a href="http:\/\/www\.eserv\.ru\/"><span itemprop="name">Eserv<\/span><\/a>\/([^\s]+)/ },
      ]
    end

What happens if the first entry in matches doesn't capture the full version for a site but the second one does? Is it fine to move the second entry to the top so that its regex is tried first? With 5 sites it's manageable, but as the list grows, it's hard to know whether our changes break accurate detection on some sites.
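A regression suite answers that question mechanically. Below is a minimal Python sketch of the idea, with two matchers loosely modelled on the plugin above and a list of saved responses paired with the version we expect. The fixtures and their version numbers are invented for the example.

```python
import re

# Ordered matchers loosely modelled on the Eserv plugin above (simplified).
MATCHERS = [
    ("header", re.compile(r"^Eserv/(\S+)")),
    ("body", re.compile(r'<meta name="generator" content="Eserv/([^\s"]+)" />')),
]


def detect_version(response):
    """Return the version from the first matcher that succeeds, else None."""
    for source, pattern in MATCHERS:
        match = pattern.search(response.get(source, ""))
        if match:
            return match.group(1)
    return None


# Hypothetical fixtures: saved responses paired with the expected version.
FIXTURES = [
    ({"header": "Eserv/3.26", "body": ""}, "3.26"),
    ({"header": "Apache", "body": '<meta name="generator" content="Eserv/4.0" />'}, "4.0"),
    ({"header": "nginx", "body": "<html></html>"}, None),
]


def run_regression():
    """Return the fixtures whose detected version differs from the expected one."""
    return [
        (response, expected, detect_version(response))
        for response, expected in FIXTURES
        if detect_version(response) != expected
    ]


print(run_regression())
```

After reordering or editing `MATCHERS`, an empty list from `run_regression()` means no known site changed its result; anything else points at the exact fixture that regressed.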

Neither WhatWeb nor Wappalyzer has public tests in its repository, and I think tests are really important for consistent results.

Conclusions

After reviewing the state of the art in the field, I'd like to introduce detectem, a new application for web software detection, in the next post.